Political scientists use experimental methods to study cause-and-effect relationships in politics. Sometimes these approaches involve exposing people to false information about their political reality. Matthew Barnfield argues that this practice of misinformation is not only unethical, but also an ineffective way of learning about the political world.

A recent increase in the study of cause-and-effect relationships has seen political scientists adopt ‘experimental’ methods. By randomly assigning participants to receive some treatment, we can estimate the effect of that treatment on attitudes or behaviours. But in many cases, the way we arrive at these findings is both unethical and invalid.
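To make the logic of random assignment concrete, here is a minimal sketch in Python. Everything in it is an illustrative assumption rather than anything from a real study: the attitude scale, the sample size, and the `true_effect` of 0.5 are all invented. It shows only that, when a coin flip decides who receives the treatment, the difference in group means lands close to the effect built into the simulation.

```python
import random
from statistics import mean


def simulate_experiment(n=10_000, true_effect=0.5, seed=0):
    """Randomly assign n hypothetical participants and return the
    difference in mean attitudes between treated and control groups."""
    rng = random.Random(seed)
    treated, control = [], []
    for _ in range(n):
        # Attitude the participant would hold without treatment (made-up scale).
        baseline = rng.gauss(0.0, 1.0)
        # Coin-flip assignment: nothing about the participant decides the group.
        if rng.random() < 0.5:
            treated.append(baseline + true_effect)
        else:
            control.append(baseline)
    return mean(treated) - mean(control)


estimate = simulate_experiment()
print(f"Estimated treatment effect: {estimate:.2f}")
```

Because assignment is random, the two groups differ on average only in whether they received the treatment, which is what licenses reading the difference in means as a causal effect.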

Typically in political science, experimental treatments take the form of an informational intervention. Whether for convenience or for more principled reasons, political scientists often just make that information up. That is, we try to learn about how information affects political outcomes through misinformation – providing false information about the state of the world.

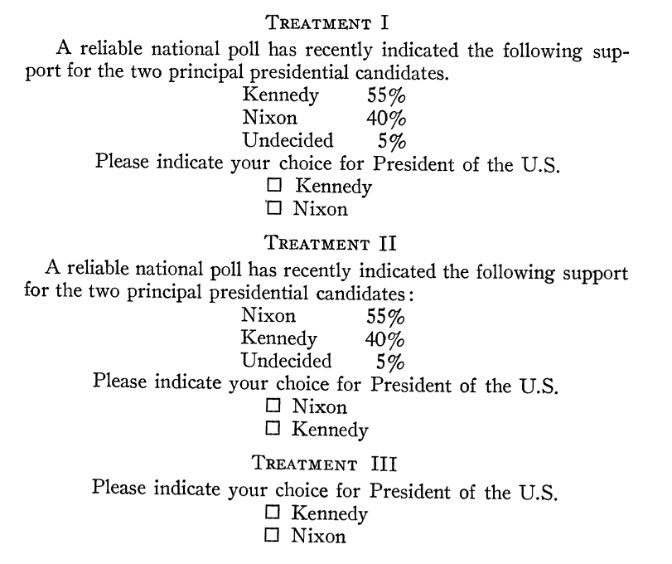

Take this example from the 1960s, back before experiments were especially common in political science:

Misinformation is a form of deception. What ethical considerations does that raise? And if misinformation is unethical, why does it remain such a widespread method?

How we express what is wrong with misinformation depends on our ethical theory. In academic discussions of the ethics of deception, one of two approaches is usually taken: deontological or utilitarian.

Deontological ethical theory originates with Immanuel Kant. He thought that people are ‘ends in themselves’ and therefore should not be treated as ‘mere means’ to anyone else’s ends. We should not deceive people into doing what we want them to do, because this violates their autonomy and dignity.

When we ask people to participate in a study, we are unlikely to tell them that they will receive misinformation about politics during it. After all, we want them to believe the information is true. But if we did admit this, they might not consent to participate. So, when they agree to participate, they have been deceived into doing so, and we lack their informed consent. We are treating them as mere means to our ends.

Misinformation breaches the informed consent of study participants, and shifts their political attitudes in ways that may persist after the study's end

Utilitarian approaches to research ethics are more concerned with weighing up the concrete costs of a research design against its potential benefits. There are many potential costs of misinformation. These include scientific costs that result from a loss of public trust in researchers, and political costs that result from having misinformed citizens with misguided political attitudes.

We can’t rely on so-called ‘debriefing’ to undo these effects. Evidence shows that even when we correct political misinformation after the fact, people’s political attitudes do not return to baseline.

Even so, what if the benefits of misinformation outweigh these costs? Misinformation affords researchers complete control over their experiment. The treatment can take whatever value we need it to take. If I am free to misinform, then I am free to use my study to estimate how people would vote if they learned that Nixon, and not Kennedy, led in the polls.

Unfortunately, under misinformation, such findings will tend to be invalid. Why? Because if I make up some misinformation about the political world, I can rarely assume with any certainty that everything else in the world could stay the same while that information was true. The world would have to change in other ways too. In most cases, those changes would also influence the outcome that the experiment is designed to measure.

We cannot assume that the world in which Nixon is leading by fifteen points is identical in every other sense to the world in which Kennedy is leading by fifteen points. What would account for this dramatic reversal of fortunes? There are bound to be some other differences between those worlds that produce such completely different preferences in the electorate.

A world in which any given piece of misinformation is true is very different from our own world...

For example, perhaps in the world in which Nixon has the lead, the incumbent Republicans had done an excellent job of managing the economy over the past four years. But if the Republicans had done an excellent job of managing the economy, then the participants who took the experiment would be more likely to vote Republican anyway. The outcome we are interested in – the number of respondents who vote Republican – would very likely differ from the one we actually measure.

So we cannot say that the effect we observe in the experiment is the effect that we would observe if the information really came true in the world.
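The Nixon example can be put into a toy simulation. This is a hedged sketch, not an analysis of real data: the function `republican_share`, the baseline vote probability of 0.40, and the bumps of 0.05 (for hearing that Nixon leads) and 0.15 (for a well-managed economy) are all invented for illustration. The experiment changes only the information, whereas a world in which the information is true is assumed also to have a stronger economy.

```python
import random


def republican_share(told_nixon_leads, economy_good, n=20_000, seed=1):
    """Share of n hypothetical respondents voting Republican.
    All probabilities below are invented for illustration."""
    rng = random.Random(seed)
    p = 0.40 + 0.05 * told_nixon_leads + 0.15 * economy_good
    return sum(rng.random() < p for _ in range(n)) / n


control = republican_share(False, False)
# Misinformation experiment: only the information changes.
experiment = republican_share(True, False)
# World where the information is true: the economy is assumed to differ too.
true_world = republican_share(True, True)

experimental_effect = experiment - control
real_world_difference = true_world - control

print(f"Effect measured in the experiment: {experimental_effect:.3f}")
print(f"Difference if the information were true: {real_world_difference:.3f}")
```

Under these assumptions the experiment recovers only the small information bump, while the world in which Nixon really leads differs by much more, because the economy moves the vote as well.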

But it gets worse yet.

When we introduce a treatment in an experiment, and there are other things that would need to be true for that treatment to be true, respondents often actually assume that those other things are true. These assumptions then affect the way people respond to the experiment. Research participants, in our example, could assume that the Republicans have been managing the economy well. Then, it is this perception of sound economic management that determines their vote choice.

...and participants often assume that the world is, indeed, different in this way and respond accordingly

If that is the case, then we are not measuring the effect of the treatment at all.

If misinformation is unethical and bad at answering our questions about politics, then how can we do better? By telling the truth. Political scientists should use information that is true in the actual world as their experimental treatments.

This ‘true treatments’ method offers a way of ethically and validly estimating what would happen if people were exposed to some information, without having to make the questionable assumptions that come with also asking what would happen if that information came true. It already is true. We’re just measuring what happens when people find that out.

Experimentalists may object that limiting ourselves to telling the truth inevitably restricts the range of questions we can address with experiments. But only applying methods to questions they can reliably answer is no bad thing – especially when ethical issues are at stake.